| By Laurent Itti | itti@usc.edu | http://jevois.org | GPL v3 |

| Video Mapping: NONE 0 0 0.0 YUYV 640 480 0.4 JeVois DarknetYOLO |

| Video Mapping: YUYV 1280 480 15.0 YUYV 640 480 15.0 JeVois DarknetYOLO |

Module Documentation

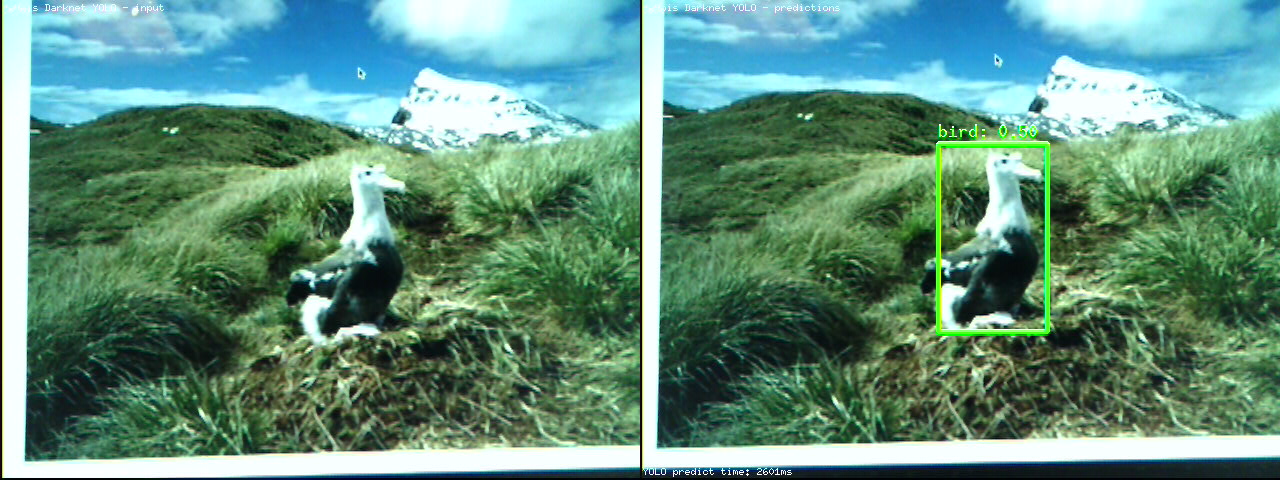

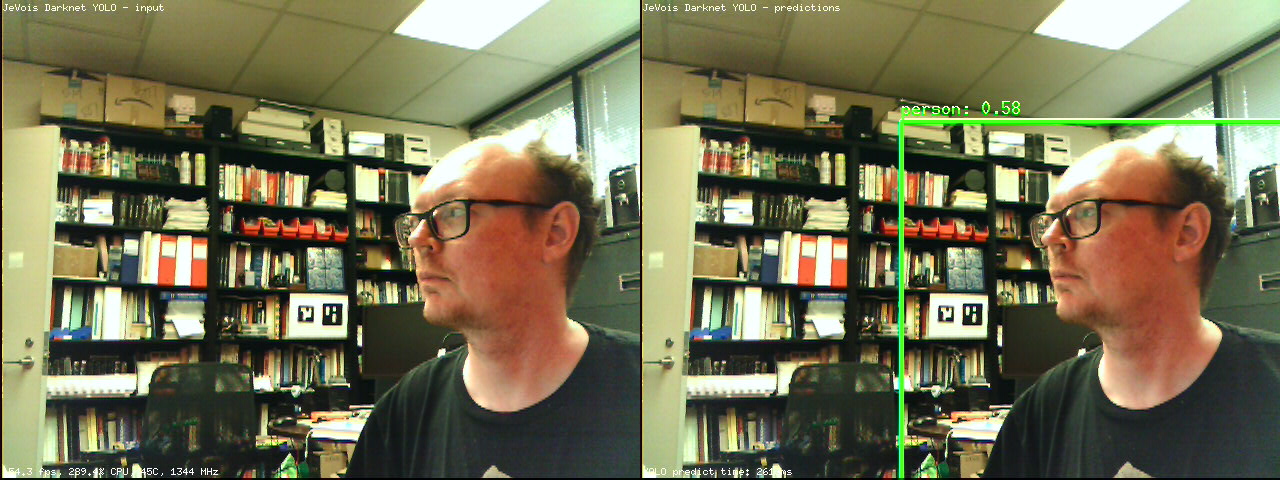

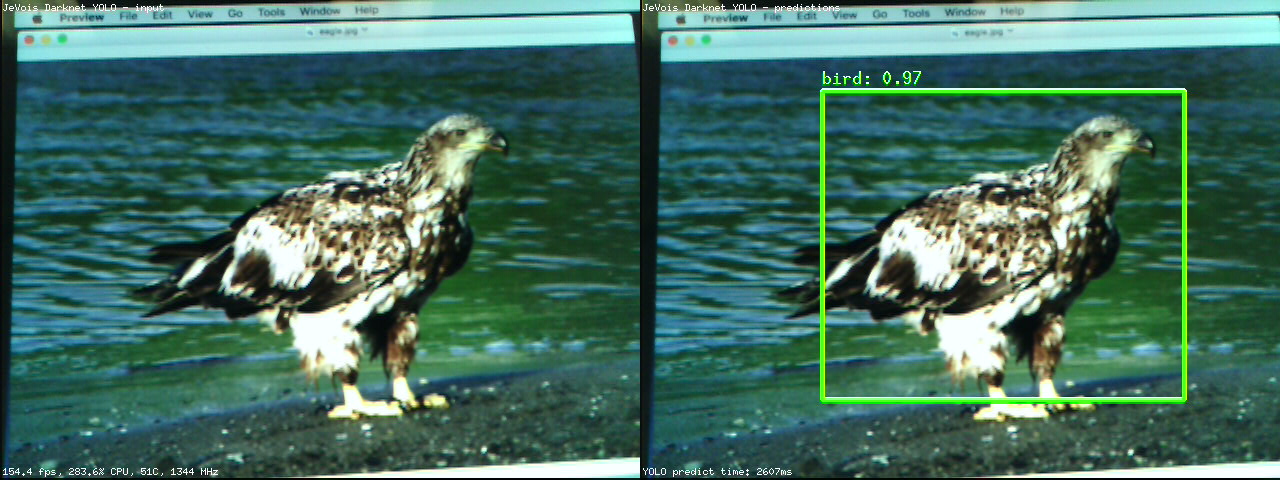

Darknet is a popular neural network framework, and YOLO is a very interesting network that detects all objects in a scene in one pass. This module detects all instances of any of the objects it knows about (determined by the network structure, labels, dataset used for training, and weights obtained) in the image that is given to it.

See https://pjreddie.com/darknet/yolo/

This module runs a YOLO network and shows all detections obtained. The YOLO network is currently quite slow, hence it is only run once in a while. Point your camera towards some interesting scene, keep it stable, and wait for YOLO to tell you what it found. The framerate figures shown at the bottom left of the display reflect the speed at which each new video frame from the camera is processed, but in this module this only amounts to converting the image to RGB, sending it to the neural network for processing in a separate thread, and creating the demo display. Actual network inference speed (time taken to compute the predictions on one image) is shown at the bottom right. See below for how to trade off speed and accuracy.
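The split described above, with fast per-frame display work in the main loop and slow inference in a separate thread, can be sketched as follows. This is only an illustration in Python, not the module's own code: `slow_inference`, `run`, and the sleep durations are hypothetical stand-ins.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def slow_inference(frame):
    """Hypothetical stand-in for the slow YOLO forward pass."""
    time.sleep(0.05)
    return f"detections for frame {frame}"

def run(frames, infer=slow_inference):
    """Process every frame, but only start inference when the worker is idle."""
    latest = None     # most recent detections, redrawn on every frame
    pending = None    # future for the inference currently in flight
    submitted = []    # frames that were actually sent to the network
    with ThreadPoolExecutor(max_workers=1) as pool:
        for frame in frames:
            # Harvest a finished prediction without blocking the frame loop.
            if pending is not None and pending.done():
                latest = pending.result()
                pending = None
            # Hand a new frame to the network only when it is free.
            if pending is None:
                pending = pool.submit(infer, frame)
                submitted.append(frame)
            # Here the real module would draw the frame plus `latest`.
            time.sleep(0.01)
        # Wait for the last prediction before returning.
        if pending is not None:
            latest = pending.result()
    return submitted, latest

submitted, latest = run(range(20))
print(f"{len(submitted)} of 20 frames were sent to the network")
print(latest)
```

Because the frame loop never waits on the network, the loop rate and the inference rate are independent, which is why the two figures on the display differ.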

Note that by default this module runs tiny-YOLO V3 which can detect and recognize 80 different kinds of objects from the Microsoft COCO dataset. This module can also run tiny-YOLO V2 for COCO, or tiny-YOLO V2 for the Pascal-VOC dataset with 20 object categories. See the module's params.cfg file to switch network.

- The 80 COCO object categories are: person, bicycle, car, motorbike, aeroplane, bus, train, truck, boat, traffic light, fire hydrant, stop sign, parking meter, bench, bird, cat, dog, horse, sheep, cow, elephant, bear, zebra, giraffe, backpack, umbrella, handbag, tie, suitcase, frisbee, skis, snowboard, sports ball, kite, baseball bat, baseball glove, skateboard, surfboard, tennis racket, bottle, wine glass, cup, fork, knife, spoon, bowl, banana, apple, sandwich, orange, broccoli, carrot, hot dog, pizza, donut, cake, chair, sofa, pottedplant, bed, diningtable, toilet, tvmonitor, laptop, mouse, remote, keyboard, cell phone, microwave, oven, toaster, sink, refrigerator, book, clock, vase, scissors, teddy bear, hair drier, toothbrush.

- The 20 Pascal-VOC object categories are: aeroplane, bicycle, bird, boat, bottle, bus, car, cat, chair, cow, diningtable, dog, horse, motorbike, person, pottedplant, sheep, sofa, train, tvmonitor.

Sometimes it will make mistakes! yolov3-tiny reaches a mean average precision (mAP) of about 33.1% on the COCO test set.

Speed and network size

The parameter netin allows you to rescale the neural network to the specified size. Beware that this will only work if the network used is fully convolutional (as is the case for the default tiny-yolo network). This not only lets you trade processing speed against accuracy, but also lets you better match the network to the input images. For example, the default size for tiny-yolo is 416x416, so passing it an input image of size 640x480 will result in first scaling that input to 416x312, then letterboxing it by adding gray borders on top and bottom so that the final input to the network is 416x416. This letterboxing can be completely avoided by just resizing the network to 320x240.
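The letterboxing arithmetic above can be checked with a short sketch. The helper name `letterbox_size` is hypothetical, and the code only computes sizes with an aspect-preserving fit; it does not reproduce how Darknet actually resamples or pads the pixels.

```python
def letterbox_size(img_w, img_h, net_w, net_h):
    """Return (scaled_w, scaled_h, pad_x, pad_y) for an aspect-preserving fit.

    pad_x and pad_y are the border widths added on each side so that the
    scaled image fills a net_w x net_h network input.
    """
    # Integer cross-multiplication avoids floating-point rounding surprises.
    if net_w * img_h <= net_h * img_w:
        # Width is the limiting dimension: borders go on top and bottom.
        new_w, new_h = net_w, (img_h * net_w) // img_w
    else:
        # Height is the limiting dimension: borders go on left and right.
        new_w, new_h = (img_w * net_h) // img_h, net_h
    return new_w, new_h, (net_w - new_w) // 2, (net_h - new_h) // 2

print(letterbox_size(640, 480, 416, 416))  # (416, 312, 0, 52)
print(letterbox_size(640, 480, 320, 240))  # (320, 240, 0, 0)
```

With a 416x416 network, the 640x480 frame shrinks to 416x312 and gets a 52-pixel border on top and bottom; with a 320x240 network the aspect ratios match and no border is needed.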

Here are expected processing speeds for yolov2-tiny-voc:

- when netin = [0 0], processes letterboxed 416x416 inputs, about 2450ms/image

- when netin = [320 240], processes 320x240 inputs, about 1350ms/image

- when netin = [160 120], processes 160x120 inputs, about 695ms/image

YOLO V3 is faster, more accurate, uses less memory, and can detect 80 COCO categories:

- when netin = [320 240], processes 320x240 inputs, about 870ms/image
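To put the timings listed above in perspective, the following sketch converts milliseconds per image into predictions per second. The dictionary labels are just descriptive strings chosen for this example; the numbers are the ones quoted in the lists above.

```python
# Inference times quoted above, in milliseconds per image.
timings_ms = {
    "yolov2-tiny-voc, letterboxed 416x416": 2450,
    "yolov2-tiny-voc, 320x240": 1350,
    "yolov2-tiny-voc, 160x120": 695,
    "yolov3-tiny, 320x240": 870,
}

def predictions_per_second(ms_per_image):
    """Convert a per-image latency in milliseconds to a rate in Hz."""
    return 1000.0 / ms_per_image

for name, ms in timings_ms.items():
    print(f"{name}: {predictions_per_second(ms):.2f} predictions/s")
```

This works out to roughly 0.41, 0.74, 1.44, and 1.15 predictions per second, respectively. The slowest figure is close to the 0.4 frames/s camera rate listed in the first video mapping at the top of this page.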

Serial messages

When detections are found which are above threshold, one message will be sent for each detected object (i.e., for each box that gets drawn when USB outputs are used), using a standardized 2D message:

- Serial message type: 2D

- id: the category of the recognized object, followed by ':' and the confidence score in percent

- x,y, or vertices: standardized 2D coordinates of object center or corners

- w,h: standardized object size

- extra: any number of additional category:score pairs which had an above-threshold score for that box

See Standardized serial messages formatting for more on standardized serial messages, and Helper functions to convert coordinates from camera resolution to standardized for more info on standardized coordinates.
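As an illustration of how a host program might consume these messages, here is a minimal parsing sketch. It assumes the "Normal" serial style, where a 2D message is taken to have the form `N2 id x y w h`; that layout, the sample line, and the names `Detection` and `parse_n2` are assumptions made for this example, so check them against the standardized serial message documentation before relying on them.

```python
from typing import NamedTuple

class Detection(NamedTuple):
    category: str
    score: float   # confidence in percent
    x: float       # standardized center coordinates
    y: float
    w: float       # standardized size
    h: float

def parse_n2(line: str) -> Detection:
    """Parse one assumed 'N2 category:score x y w h' message."""
    fields = line.split()
    if len(fields) < 6 or fields[0] != "N2":
        raise ValueError(f"not an N2 message: {line!r}")
    # The last four fields are numeric; whatever lies between the header
    # and them is the id, which tolerates category names containing spaces.
    x, y, w, h = (float(v) for v in fields[-4:])
    ident = " ".join(fields[1:-4])
    category, _, score = ident.rpartition(":")
    return Detection(category, float(score), x, y, w, h)

# Hypothetical sample line, not captured from a real device.
det = parse_n2("N2 person:82.5 -120 45 300 600")
print(det)
```

Splitting the id on its last ':' keeps the parser working even if a category name itself were to contain a colon.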

| Parameter | Type | Description | Default | Valid Values |
|---|---|---|---|---|
| (DarknetYOLO) netin | cv::Size | Width and height (in pixels) of the neural network input layer, or [0 0] to make it match camera frame size. NOTE: for YOLO v3 sizes must be multiples of 32. | cv::Size(320, 224) | - |
| (Yolo) dataroot | std::string | Root path for data, config, and weight files. If empty, use the module's path. | JEVOIS_SHARE_PATH /darknet/yolo | - |
| (Yolo) datacfg | std::string | Data configuration file (if relative, relative to dataroot) | cfg/coco.data | - |
| (Yolo) cfgfile | std::string | Network configuration file (if relative, relative to dataroot) | cfg/yolov3-tiny.cfg | - |
| (Yolo) weightfile | std::string | Network weights file (if relative, relative to dataroot) | weights/yolov3-tiny.weights | - |
| (Yolo) namefile | std::string | Category names file, or empty to fetch it from the network config file (if relative, relative to dataroot) | - | |
| (Yolo) nms | float | Non-maximum suppression intersection-over-union threshold in percent | 45.0F | jevois::Range<float>(0.0F, 100.0F) |
| (Yolo) thresh | float | Detection threshold in percent confidence | 24.0F | jevois::Range<float>(0.0F, 100.0F) |
| (Yolo) hierthresh | float | Hierarchical detection threshold in percent confidence | 50.0F | jevois::Range<float>(0.0F, 100.0F) |
| (Yolo) threads | int | Number of parallel computation threads | 6 | jevois::Range<int>(1, 1024) |
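To make the roles of the thresh and nms parameters concrete, here is a minimal sketch of confidence filtering followed by greedy non-maximum suppression. It is a generic, class-agnostic version written for illustration; Darknet's own implementation may differ (for example, by working per class), and the function names and sample boxes are hypothetical.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0

def filter_detections(dets, thresh=24.0, nms=45.0):
    """Keep boxes scoring at least `thresh` percent, then drop any box whose
    IoU with an already kept, higher-scoring box exceeds `nms` percent.

    dets is a list of (score_percent, box) pairs.
    """
    candidates = sorted((d for d in dets if d[0] >= thresh),
                        key=lambda d: d[0], reverse=True)
    kept = []
    for score, box in candidates:
        if all(iou(box, k[1]) * 100.0 <= nms for k in kept):
            kept.append((score, box))
    return kept

dets = [
    (90.0, (0, 0, 100, 100)),
    (80.0, (10, 10, 110, 110)),    # overlaps the first box by about 68% IoU
    (60.0, (200, 200, 300, 300)),  # separate object
    (10.0, (0, 0, 50, 50)),        # below the detection threshold
]
print(filter_detections(dets))
# [(90.0, (0, 0, 100, 100)), (60.0, (200, 200, 300, 300))]
```

Raising thresh removes low-confidence boxes earlier; lowering nms makes the suppression of overlapping boxes more aggressive.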

params.cfg file

    # Parameters for module DarknetYOLO to allow for network selection

    # Tiny YOLO v2 trained on Pascal VOC (20 object categories):
    #datacfg=cfg/voc.data
    #cfgfile=cfg/yolov2-tiny-voc.cfg
    #weightfile=weights/yolov2-tiny-voc.weights
    #netin=320 240

    # Tiny YOLO v3 trained on COCO (80 object categories):
    datacfg=cfg/coco.data
    cfgfile=cfg/yolov3-tiny.cfg
    weightfile=weights/yolov3-tiny.weights
    netin=160 120
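The params.cfg file uses simple `key=value` lines, with `#` marking comments and disabled settings. The sketch below shows one way to read the active settings from such text on a host computer; `parse_params` is a hypothetical helper, not the parser JeVois itself uses.

```python
def parse_params(text):
    """Return the active key=value settings, skipping blanks and # comments."""
    params = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:  # ignore lines that contain no '='
            params[key.strip()] = value.strip()
    return params

EXAMPLE = """\
# Tiny YOLO v2 trained on Pascal VOC (20 object categories):
#datacfg=cfg/voc.data
#cfgfile=cfg/yolov2-tiny-voc.cfg
#weightfile=weights/yolov2-tiny-voc.weights
#netin=320 240

# Tiny YOLO v3 trained on COCO (80 object categories):
datacfg=cfg/coco.data
cfgfile=cfg/yolov3-tiny.cfg
weightfile=weights/yolov3-tiny.weights
netin=160 120
"""

cfg = parse_params(EXAMPLE)
net_w, net_h = (int(v) for v in cfg["netin"].split())
print(cfg["cfgfile"], net_w, net_h)  # cfg/yolov3-tiny.cfg 160 120
```

Switching networks then amounts to commenting out one group of four lines and uncommenting the other.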

| Detailed docs: | DarknetYOLO |
|---|---|
| Copyright: | Copyright (C) 2017 by Laurent Itti, iLab and the University of Southern California |
| License: | GPL v3 |
| Distribution: | Unrestricted |
| Restrictions: | None |
| Support URL: | http://jevois.org/doc |
| Other URL: | http://iLab.usc.edu |
| Address: | University of Southern California, HNB-07A, 3641 Watt Way, Los Angeles, CA 90089-2520, USA |