JeVois Tutorials

1.23

JeVois Smart Embedded Machine Vision Tutorials


This tutorial will show you how to create a simple machine vision module that runs on the JeVois smart camera, using the JeVois Inventor graphical user interface.

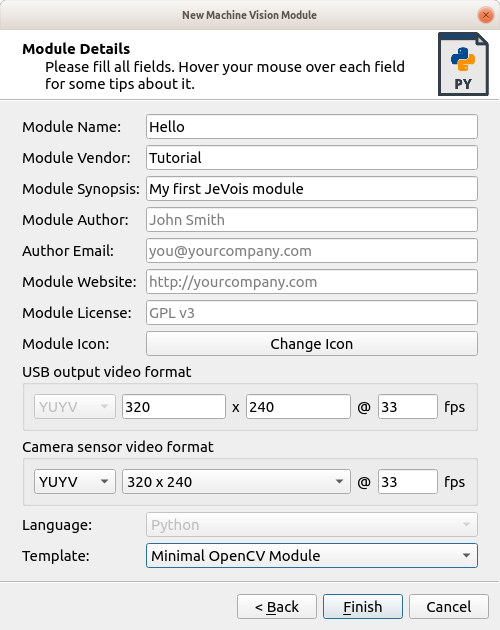

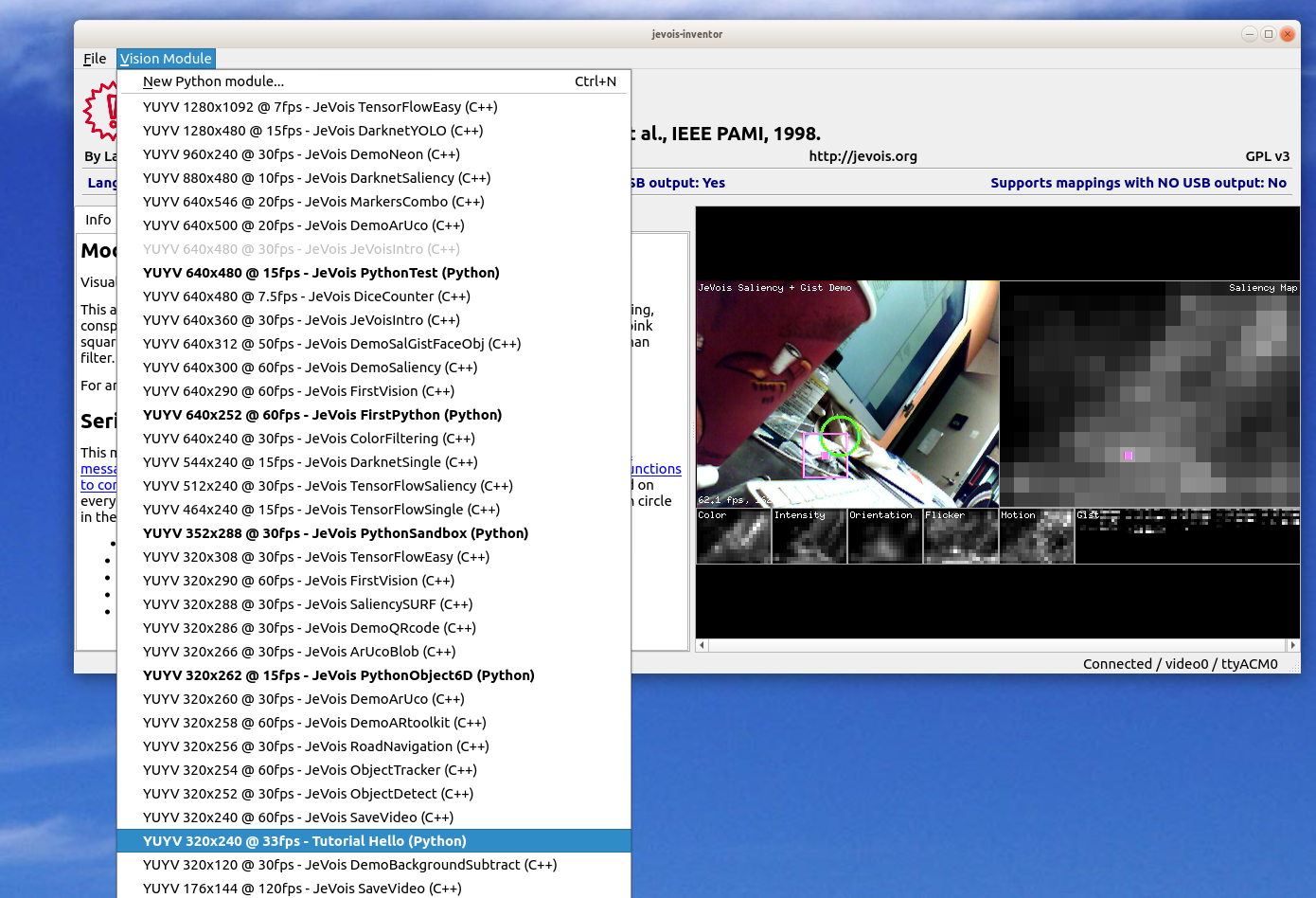

Create a new module in JeVois Inventor (CTRL-N). Fill in the details as shown below:

A few notes:

For more information:

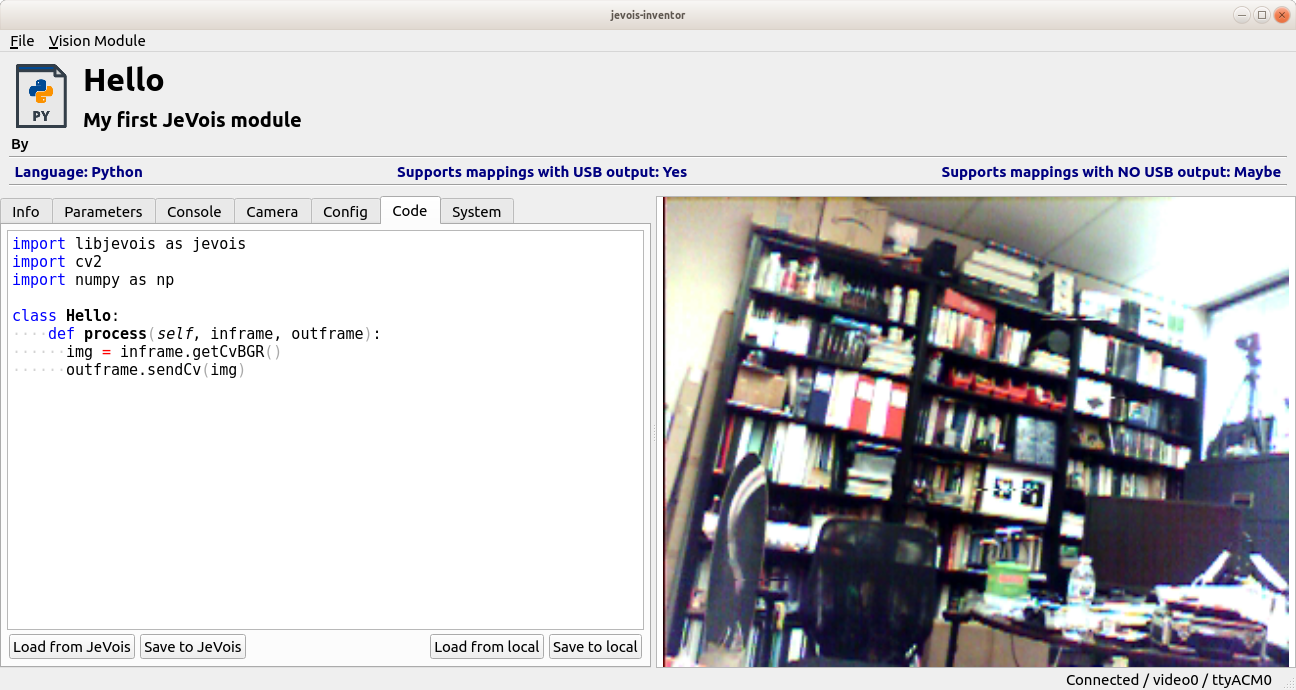

Switch to the Code tab of JeVois Inventor. You will see an editor for Python code.

JeVois supports full Python 3.6, numpy, and OpenCV 3.4.0.

As mentioned above, our mission is to compute something interesting in the video frames captured by the JeVois camera sensor, and to create some result video frames that we can send to a host computer over the USB link.

The JeVois core software that runs in the smart camera takes care of all the hard details of capturing images from the sensor and of sending output images over the USB link. All that is left for us is to focus on the transformation from an input image to an output image.

A module that does not change the image, hence making JeVois behave like a regular USB webcam, would look like this:
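The listing did not survive on this page, so here is a minimal sketch of such a pass-through module. It assumes the `getCvBGR()` and `sendCv()` calls of the JeVois Python API. `FakeInFrame` and `FakeOutFrame` are hypothetical stand-ins that only exist so the sketch can be tried on a desktop; on the camera, the JeVois core supplies the real frame proxies.

```python
# On the camera, a module would typically also start with:
#   import libjevois as jevois
# It is left out here so that the sketch also runs on a desktop.


class Hello:
    def process(self, inframe, outframe):
        # Wait for the next camera frame and obtain it as a BGR image
        img = inframe.getCvBGR()
        # Send the unmodified image to the host computer over USB
        outframe.sendCv(img)


# Hypothetical stand-ins for the JeVois frame proxies (desktop testing only)
class FakeInFrame:
    def __init__(self, img):
        self._img = img

    def getCvBGR(self):
        return self._img


class FakeOutFrame:
    def __init__(self):
        self.sent = None

    def sendCv(self, img):
        self.sent = img


if __name__ == "__main__":
    frame = [[(0, 0, 0)] * 4 for _ in range(3)]  # tiny placeholder "image"
    out = FakeOutFrame()
    Hello().process(FakeInFrame(frame), out)
    print("output is the input frame:", out.sent is frame)
```

Only the `Hello` class would go into the Inventor editor; the two fake classes and the `__main__` block are scaffolding.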

The process() function of class Hello will be called by the JeVois core, for every video frame.

The parameter inframe of process() is a proxy to the next video frame from the camera. It allows us to request, possibly wait for, and eventually obtain the next frame captured by the camera sensor.

Likewise, the parameter outframe is a proxy to the next frame that will be sent to the host computer over the USB link.

In the above code, we basically:

Try it for yourself:

Select all existing code in the editor with CTRL-A and delete it with DEL, enter the module code, then save to JeVois with CTRL-S.

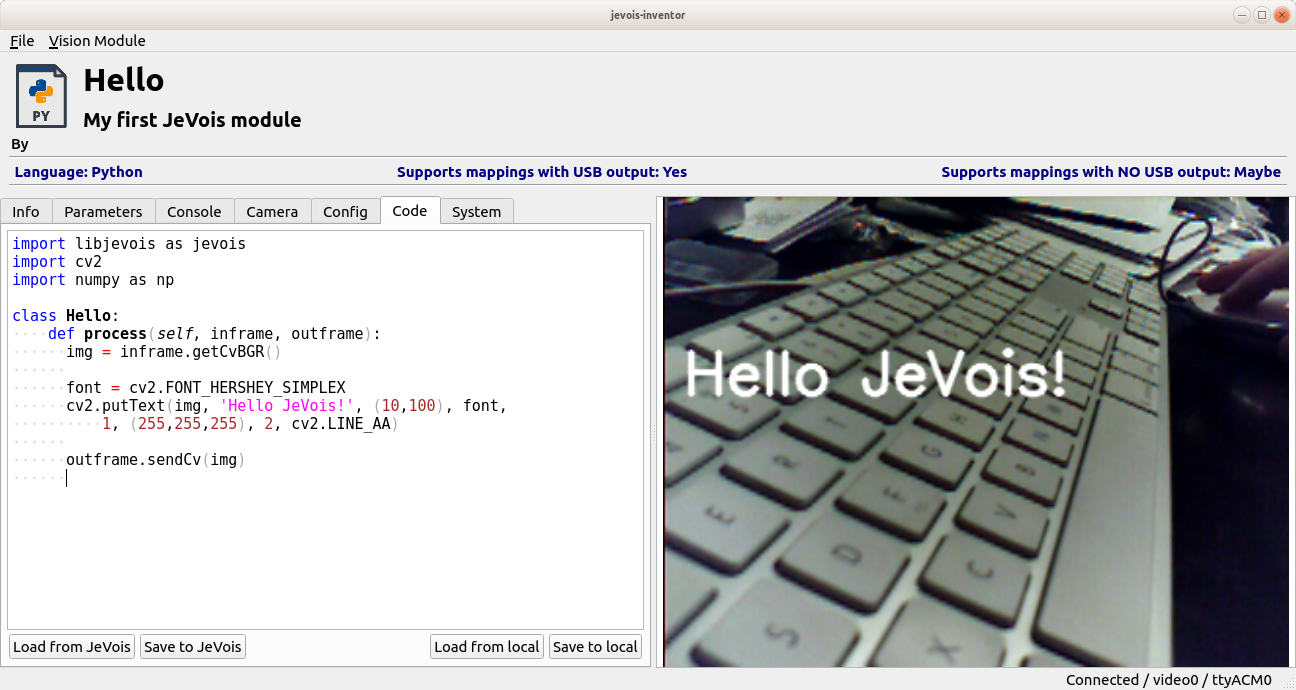

To start transitioning from a plain camera to a smart camera, let us see how we can make the output image different from the input image.

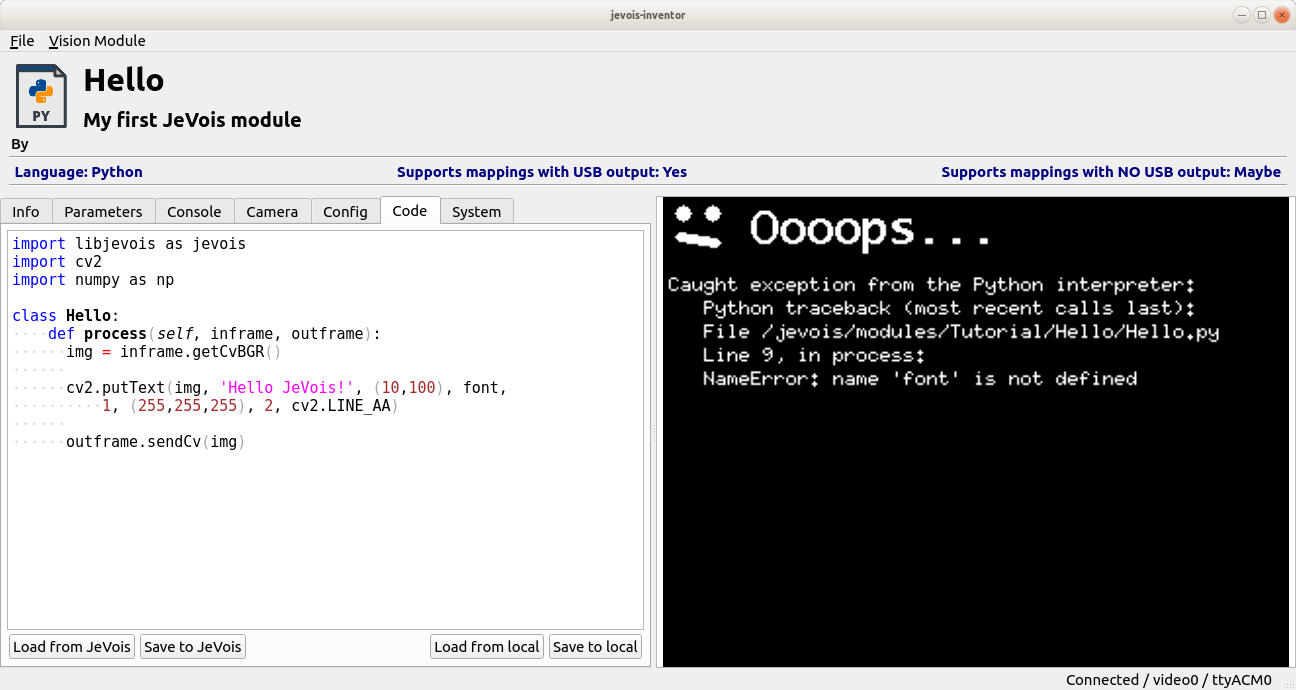

No worries, JeVois will catch your errors and display them in the video output. For example, delete the line

and save to JeVois. You should see:

Please read the entire message before you ask us questions. Here, the error is clearly explained on the last line of the error message:

NameError: name 'font' is not defined

Paste the line you deleted back in its proper place, save to JeVois, and you should see the module working again.
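To see where that last line comes from, the following desktop sketch reproduces the same kind of failure in plain Python. `broken_process` and `describe_error` are hypothetical names used only for illustration; this is not how the JeVois core itself catches or renders errors.

```python
def broken_process():
    # The assignment to `font` is missing, so the name is undefined here
    return ("Hello JeVois", font)  # noqa: F821


def describe_error(func):
    """Call func and return 'ExceptionType: message' if it raises, else None."""
    try:
        func()
    except Exception as exc:
        return "{}: {}".format(type(exc).__name__, exc)
    return None


if __name__ == "__main__":
    print(describe_error(broken_process))
```

The printed summary has the same shape as the last line of a Python traceback: the exception type, then the message naming the undefined variable.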

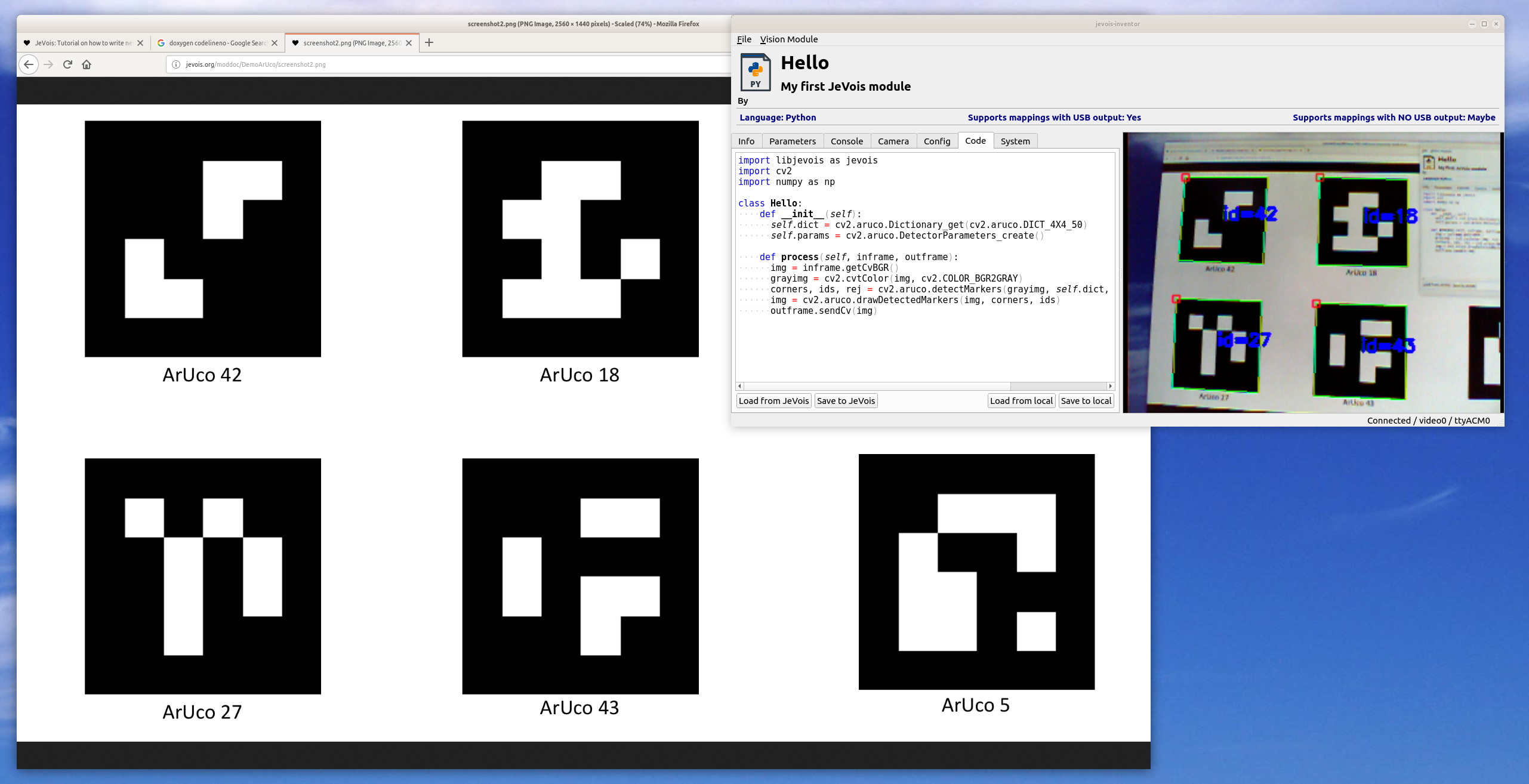

That is easy: ArUco tag detection is built into OpenCV. You just need 5 lines of new code to create that demo (they come from a quick web search for opencv aruco python):

Show it some ArUcos, for example those from the screenshots of the JeVois DemoArUco module.

For more info, see https://docs.opencv.org/3.1.0/d5/dae/tutorial_aruco_detection.html

You are ready to write your own powerful machine vision modules for JeVois!

For further reading:
