
| By Laurent Itti | itti@usc.edu | http://jevois.org | GPL v3 |
|---|---|---|---|
| Video Mapping: NONE 0 0 0.0 YUYV 640 480 15.0 JeVois MultiDNN | | | |
| Video Mapping: YUYV 640 498 15.0 YUYV 640 480 15.0 JeVois MultiDNN | | | |
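Each video mapping line appears to list the USB output format (pixel format, width, height, frame rate), then the camera capture format in the same four fields, then the vendor and module name. The sketch below splits a mapping string along that assumed field order; `VideoMapping` and `parse_mapping` are hypothetical names for illustration, not part of the JeVois API.

```python
from typing import NamedTuple


class VideoMapping(NamedTuple):
    """Fields of one video mapping: USB output format, camera format, then module."""
    out_fmt: str   # USB output pixel format, or NONE
    out_w: int
    out_h: int
    out_fps: float
    cam_fmt: str   # camera capture pixel format
    cam_w: int
    cam_h: int
    cam_fps: float
    vendor: str
    module: str

    def has_usb_output(self) -> bool:
        # An output format of NONE means no video is streamed over USB.
        return self.out_fmt != "NONE"


def parse_mapping(line: str) -> VideoMapping:
    """Hypothetical helper: split a mapping string into its ten whitespace-separated fields."""
    f = line.split()
    if len(f) != 10:
        raise ValueError(f"expected 10 fields, got {len(f)}: {line!r}")
    return VideoMapping(f[0], int(f[1]), int(f[2]), float(f[3]),
                        f[4], int(f[5]), int(f[6]), float(f[7]), f[8], f[9])


if __name__ == "__main__":
    for text in ("NONE 0 0 0.0 YUYV 640 480 15.0 JeVois MultiDNN",
                 "YUYV 640 498 15.0 YUYV 640 480 15.0 JeVois MultiDNN"):
        m = parse_mapping(text)
        print(m.module, m.has_usb_output(), (m.out_w, m.out_h), (m.cam_w, m.cam_h))
```

Both mappings capture 640x480 YUYV at 15 fps from the camera; they differ only in whether, and at what size, video is sent over USB.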

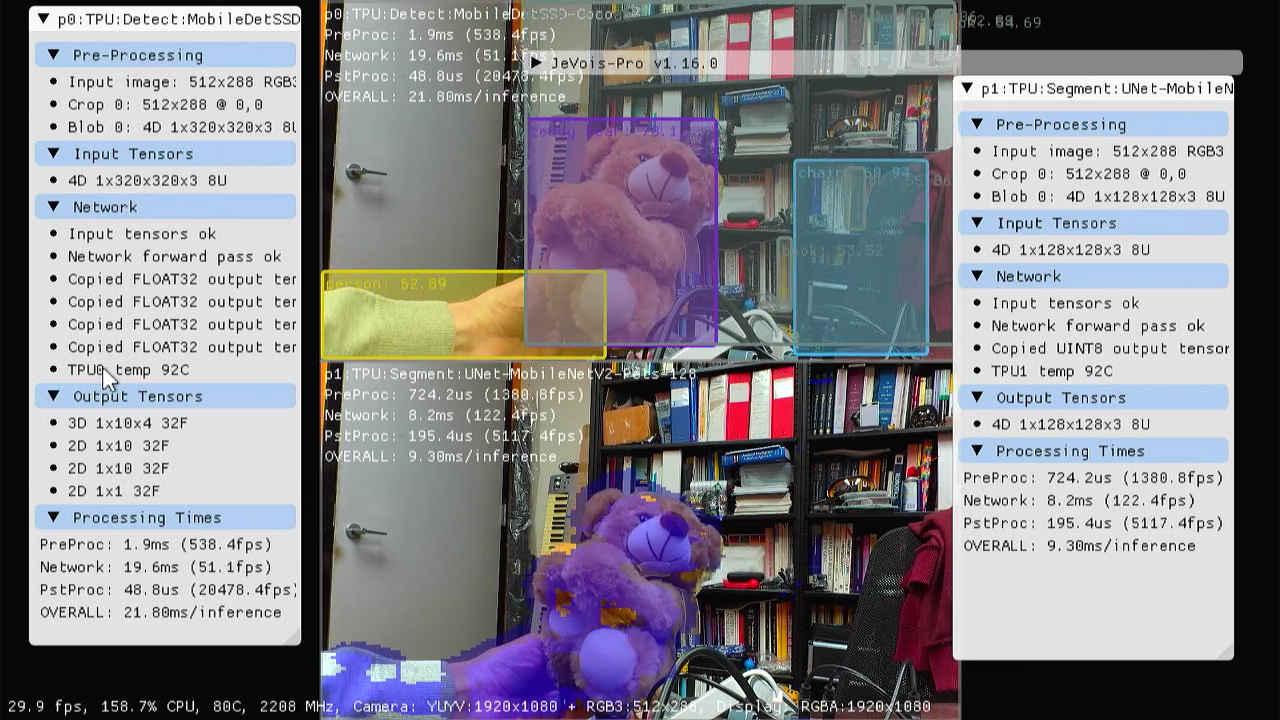

Module Documentation

See the DNN module for more details about the JeVois DNN framework.

Edit the module's params.cfg file to select which models to run.

You can load any model at runtime by setting the pipe parameter for each pipeline.
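The pipe values in the params.cfg example below (for instance `NPU:Detect:yolo11n-512x288`) appear to follow an `Accel:Type:Name` pattern: an accelerator or framework, a pipeline type, and a model entry from the zoo file. The sketch below splits a value along that assumed pattern; `PipeSpec` and `parse_pipe` are hypothetical and only illustrate the format, they are not how JeVois itself parses the parameter.

```python
from typing import NamedTuple


class PipeSpec(NamedTuple):
    accel: str  # first field, e.g. OpenCV, NPU or VPU in the params.cfg example
    kind: str   # second field, e.g. YuNet, Detect or Pose
    name: str   # model entry name, e.g. yolo11n-512x288


def parse_pipe(value: str) -> PipeSpec:
    """Hypothetical helper: split a pipe value of the assumed form Accel:Type:Name."""
    # maxsplit=2 keeps any further colons inside the model name field
    parts = value.split(":", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"pipe value is not of the form Accel:Type:Name: {value!r}")
    return PipeSpec(*parts)


if __name__ == "__main__":
    for v in ("OpenCV:YuNet:YuNet-Face-512x288",
              "NPU:Detect:yolov8n-seg-512x288",
              "VPU:Detect:pedestrian-and-vehicle-detector-adas-0001"):
        print(parse_pipe(v))
```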

| Parameter | Type | Description | Default | Valid Values |
|---|---|---|---|---|
| (MultiDNN) grid | cv::Size | Grid of networks to run and display | cv::Size(2, 2) | - |
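The grid parameter is a width and height, so the default cv::Size(2, 2) gives four pipelines. The params.cfg comments state that pipelines are named p0 (top left corner), p1, p2, ... in a horizontal raster scan on screen. The sketch below shows that naming order; splitting the display into equal tiles and the 1920x1080 display size are assumptions, and `pipeline_layout` is a hypothetical helper, not the module's drawing code.

```python
def pipeline_layout(cols: int, rows: int, width: int, height: int) -> dict:
    """Map pipeline names p0, p1, ... to (x, y, w, h) screen tiles.

    Names follow a horizontal raster scan starting at the top-left tile. Splitting
    the display into equal tiles is an assumption of this sketch.
    """
    if cols <= 0 or rows <= 0:
        raise ValueError("grid width and height must be positive")
    tile_w, tile_h = width // cols, height // rows
    layout = {}
    for i in range(cols * rows):
        row, col = divmod(i, cols)  # fill a row left to right, then move down
        layout[f"p{i}"] = (col * tile_w, row * tile_h, tile_w, tile_h)
    return layout


if __name__ == "__main__":
    # Default grid cv::Size(2, 2) on an assumed 1920x1080 display.
    for name, tile in pipeline_layout(2, 2, 1920, 1080).items():
        print(name, tile)
```

With the default 2x2 grid, p0 and p1 form the top row and p2 and p3 the bottom row.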

params.cfg file:

    # Select width and height of grid:
    grid=2 2

    # Pipelines are named p0 (top left corner), p1, p2, ... in a horizontal raster scan on screen. For each pipeline, you
    # must first set its zoo parameter to a model zoo file (default: models.yml), otherwise it will be left
    # unconfigured. Then, select a pipeline from that zoo file by setting the corresponding pipe parameter:
    p0:zoo=models.yml
    p0:pipe=OpenCV:YuNet:YuNet-Face-512x288
    p0:processing=Async

    p1:zoo=models.yml
    p1:pipe=NPU:Detect:yolov8n-seg-512x288
    p1:processing=Async

    p2:zoo=models.yml
    p2:pipe=NPU:Detect:yolo11n-512x288
    p2:processing=Async

    p3:zoo=models.yml
    p3:pipe=NPU:Pose:yolov8n-pose-512x288
    p3:processing=Async
    # or, if you have a Myriad-X:
    #p3:pipe=VPU:Detect:pedestrian-and-vehicle-detector-adas-0001
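The params.cfg format above mixes top-level settings (grid) with per-pipeline settings written as `pN:param=value`, and the comments require each pipeline's zoo to be set before its pipe. The sketch below is a hypothetical checker for that structure, assuming one setting per line and `#` comments; it is not the parser JeVois uses, and `parse_params_cfg` and `unconfigured` are illustrative names only.

```python
def parse_params_cfg(text: str):
    """Hypothetical helper: split params.cfg-style text into settings.

    Returns (top, pipelines, warnings): top-level keys such as grid, a dict of
    per-pipeline dicts keyed by prefix (p0, p1, ...), and ordering warnings.
    """
    top, pipelines, warnings = {}, {}, []
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments, including commented-out settings
        # Split on the first '=' only, so pipe values keep their colons
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"line {lineno}: missing '=' in {raw!r}")
        key, value = key.strip(), value.strip()
        prefix, colon, param = key.partition(":")
        if not colon:
            top[key] = value
            continue
        settings = pipelines.setdefault(prefix, {})
        # The comments say zoo must be set before pipe selects an entry from it.
        if param == "pipe" and "zoo" not in settings:
            warnings.append(f"line {lineno}: {prefix}:pipe set before {prefix}:zoo")
        settings[param] = value
    return top, pipelines, warnings


def unconfigured(pipelines: dict, count: int) -> list:
    """Pipeline names p0..p{count-1} that never had their zoo parameter set."""
    return [f"p{i}" for i in range(count) if "zoo" not in pipelines.get(f"p{i}", {})]


if __name__ == "__main__":
    cfg = """
# Select width and height of grid:
grid=2 2
p0:zoo=models.yml
p0:pipe=OpenCV:YuNet:YuNet-Face-512x288
p1:pipe=NPU:Detect:yolo11n-512x288
"""
    top, pipes, warns = parse_params_cfg(cfg)
    cols, rows = (int(v) for v in top["grid"].split())
    print(pipes["p0"]["pipe"])
    print(unconfigured(pipes, cols * rows))  # p1 lacks zoo; p2 and p3 are never mentioned
    print(warns)
```

In this trimmed example only p0 would be configured; p1 selects a pipe without a zoo, which the checker reports as an ordering warning.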

| Detailed docs: | MultiDNN |
|---|---|
| Copyright: | Copyright (C) 2018 by Laurent Itti, iLab and the University of Southern California |
| License: | GPL v3 |
| Distribution: | Unrestricted |
| Restrictions: | None |
| Support URL: | http://jevois.org/doc |
| Other URL: | http://iLab.usc.edu |
| Address: | University of Southern California, HNB-07A, 3641 Watt Way, Los Angeles, CA 90089-2520, USA |