| By Laurent Itti | itti@usc.edu | http://jevois.org | GPL v3 |

| Video Mapping: NONE 0 0 0.0 YUYV 640 480 15.0 JeVois DNN |

| Video Mapping: YUYV 640 498 15.0 YUYV 640 480 15.0 JeVois DNN |

Module Documentation

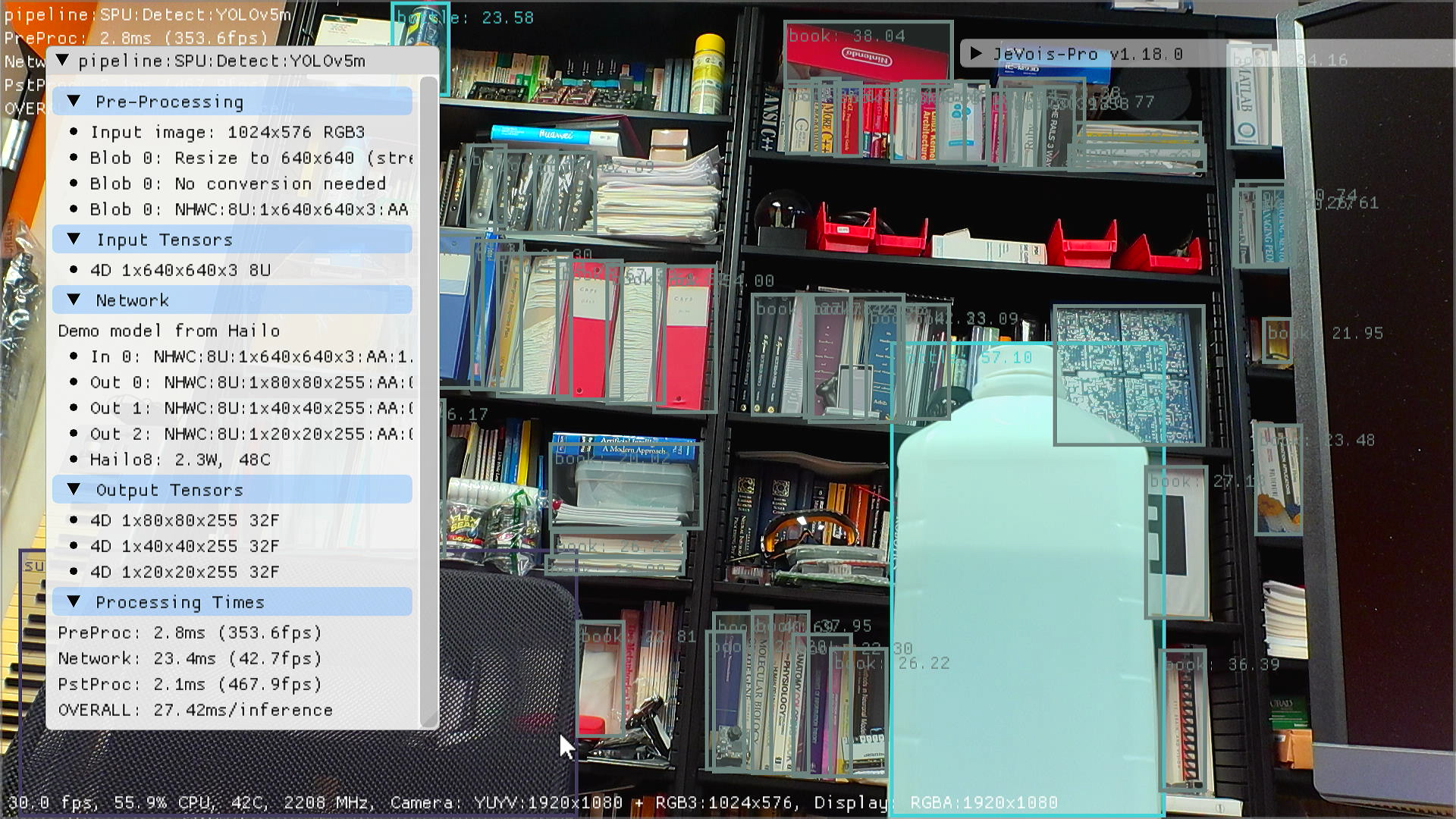

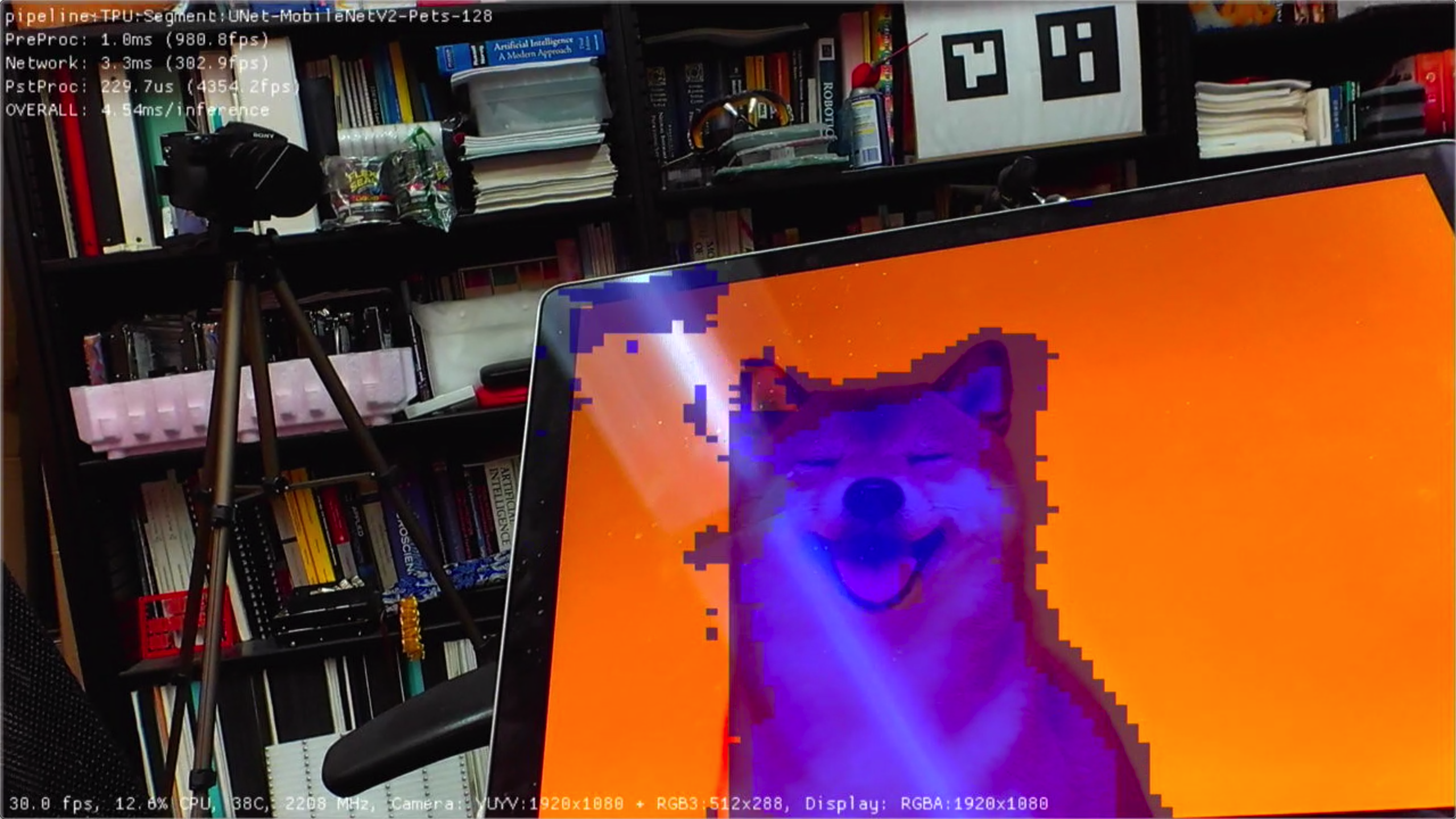

This module runs a deep neural network using the OpenCV DNN library. Classification networks try to identify the whole object or scene in the field of view, and return the top scoring object classes. Detection networks analyze a scene and produce a number of bounding boxes around detected objects, together with identity labels and confidence scores for each detected box. Semantic segmentation networks create a pixel-by-pixel mask which assigns a class label to every location in the camera view.

To select a network, set the pipe parameter of the Pipeline component.

The following keys are used in the JeVois-Pro GUI (pipe parameter of Pipeline component):

- OpenCV: network loaded by OpenCV DNN framework and running on CPU.

- ORT: network loaded by ONNX-Runtime framework and running on CPU.

- NPU: network running natively on the JeVois-Pro integrated 5-TOPS NPU (neural processing unit).

- SPU: network running on the optional 26-TOPS Hailo8 SPU accelerator (stream processing unit).

- TPU: network running on the optional 4-TOPS Google Coral TPU accelerator (tensor processing unit).

- VPU: network running on the optional 1-TOPS MyriadX VPU accelerator (vector processing unit).

- NPUX: network loaded by OpenCV and running on NPU via the TIM-VX OpenCV extension. To run efficiently, the network should be quantized to int8; otherwise, slow CPU-based emulation will occur.

- VPUX: network optimized for VPU but running on CPU if a VPU is not available. VPUX entries are created automatically when no VPU accelerator is detected, by scanning all VPU entries and changing their target from Myriad to CPU. If a VPU is detected, then VPU models are listed and VPUX ones are not. VPUX emulation runs on the JeVois-Pro CPU using the Arm Compute Library, which provides efficient implementations of various network layers and operations.

For expected network speed, see JeVois-Pro Deep Neural Network Benchmarks.

Serial messages

For classification networks, when object classes are found with confidence scores above the value of parameter thresh, one message is sent per video frame, containing up to top category:score pairs (top is also a parameter). The exact message format depends on the current serstyle setting and is described in Standardized serial messages formatting. For example, when serstyle is Detail, this module sends:

DO category:score category:score ... category:score

where category is a category name (from namefile) and score is the confidence score from 0.0 to 100.0 that this category was recognized. The pairs are in order of decreasing score.
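As an illustration of how a host computer might consume the Detail-style classification message described above, here is a minimal Python sketch. The function name parse_do_message and the sample categories are hypothetical, and the sketch assumes that category names contain no whitespace; it is not part of the JeVois software.

```python
from typing import List, Tuple


def parse_do_message(line: str) -> List[Tuple[str, float]]:
    """Parse 'DO category:score ...' into (category, score) pairs.

    Assumption: tokens are separated by whitespace and category names
    themselves contain no whitespace.
    """
    tokens = line.strip().split()
    if not tokens or tokens[0] != "DO":
        raise ValueError(f"not a DO message: {line!r}")

    pairs = []
    for token in tokens[1:]:
        # Split on the LAST colon so a category name that itself
        # contains ':' does not break the parse.
        category, sep, score = token.rpartition(":")
        if not sep or not category:
            raise ValueError(f"malformed category:score pair: {token!r}")
        pairs.append((category, float(score)))
    return pairs


# Hypothetical example line; scores are confidences from 0.0 to 100.0,
# listed in order of decreasing score.
results = parse_do_message("DO dog:87.3 cat:9.1 fox:2.4")
best_category, best_score = results[0]
```

Because the pairs arrive in order of decreasing score, the first element is the top-scoring class.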

See Standardized serial messages formatting for more on standardized serial messages, and Helper functions to convert coordinates from camera resolution to standardized for more info on standardized coordinates.

For object detection networks, when detections are found with scores above threshold, one message is sent for each detected object (i.e., for each box that gets drawn when USB outputs are used), using a standardized 2D message:

- Serial message type: 2D

- id: the category of the recognized object, followed by ':' and the confidence score in percent

- x, y, or vertices: standardized 2D coordinates of object center or corners

- w, h: standardized object size

- extra: any number of additional category:score pairs which had an above-threshold score for that box
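The fields listed above could be unpacked on the host side roughly as follows. This Python sketch assumes the Normal serstyle wire layout "N2 id x y w h", which is not spelled out on this page and should be checked against Standardized serial messages formatting; the Detection class and parse_n2_message function are hypothetical names. Detail-style messages, which carry vertices and extra category:score pairs, are not handled here.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    category: str
    score: float  # confidence in percent
    x: float      # standardized center x
    y: float      # standardized center y
    w: float      # standardized width
    h: float      # standardized height


def parse_n2_message(line: str) -> Detection:
    """Parse one detection message, ASSUMING the layout 'N2 id x y w h'
    where id is 'category:score'."""
    tokens = line.strip().split()
    if len(tokens) != 6 or tokens[0] != "N2":
        raise ValueError(f"not an N2 message: {line!r}")

    # The id field combines the category and its confidence score.
    category, sep, score = tokens[1].rpartition(":")
    if not sep or not category:
        raise ValueError(f"malformed id field: {tokens[1]!r}")

    x, y, w, h = (float(t) for t in tokens[2:])
    return Detection(category, float(score), x, y, w, h)


# Hypothetical example line for one detected box.
det = parse_n2_message("N2 person:92.5 -120 45 300 600")
```

One message arrives per detected box, so a host program would typically call such a parser once per received line and group the results by video frame itself.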


| Parameter | Type | Description | Default | Valid Values |

|---|---|---|---|---|

| This module exposes no parameters | | | | |

| Detailed docs: | DNN |

|---|---|

| Copyright: | Copyright (C) 2018 by Laurent Itti, iLab and the University of Southern California |

| License: | GPL v3 |

| Distribution: | Unrestricted |

| Restrictions: | None |

| Support URL: | http://jevois.org/doc |

| Other URL: | http://iLab.usc.edu |

| Address: | University of Southern California, HNB-07A, 3641 Watt Way, Los Angeles, CA 90089-2520, USA |