JeVois Tutorials

1.23

JeVois Smart Embedded Machine Vision Tutorials

The core JeVois software provides a number of helper classes to achieve things that are often useful in machine vision modules. One of them is a Timer class that computes average frames/s of processing, and adds some information about CPU usage, temperature, and frequency.

The jevois::Timer class is designed to be very simple to use. It has two functions, start() and stop(). From the Timer documentation:

> This class reports the time spent between start() and stop(), at specified intervals. Because JeVois modules typically work at video rates, this class only reports the average time after some number of iterations through start() and stop(). Thus, even if the time of an operation between start() and stop() is only a few microseconds, by reporting it only every 100 frames one will not slow down the overall framerate too much. See Profiler for a class that provides additional checkpoints between start() and stop().
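
To make the reporting behavior described above concrete, here is a minimal pure-Python sketch of the idea. It is not the jevois::Timer implementation: the class name `AveragingTimer`, its parameters, and the report format are hypothetical. It also derives its frames/s figure only from the time measured between start() and stop(), and leaves out the CPU usage, temperature, and frequency information.

```python
import time


class AveragingTimer:
    """Hypothetical stand-in that mimics the reporting idea, not jevois::Timer."""

    def __init__(self, name, interval=100, clock=time.perf_counter):
        self.name = name
        self.interval = interval   # number of start()/stop() pairs per report
        self.clock = clock         # injectable clock, handy for testing
        self.count = 0
        self.total = 0.0
        self.report = f"{name}: -- fps"   # placeholder until the first report
        self._t0 = None

    def start(self):
        # Remember when the timed section began.
        self._t0 = self.clock()

    def stop(self):
        # Accumulate the elapsed time for this iteration.
        self.total += self.clock() - self._t0
        self.count += 1
        if self.count == self.interval:
            # Refresh the report only once per interval, so that reporting
            # itself costs almost nothing on most frames.
            avg = self.total / self.count
            fps = 1.0 / avg if avg > 0 else float("inf")
            self.report = f"{self.name}: {avg * 1000.0:.2f} ms avg, {fps:.1f} fps"
            self.count = 0
            self.total = 0.0
        # Always return the most recent report string.
        return self.report
```

Between reports, stop() keeps returning the previous string, which is why a value drawn on screen would only change once per interval.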

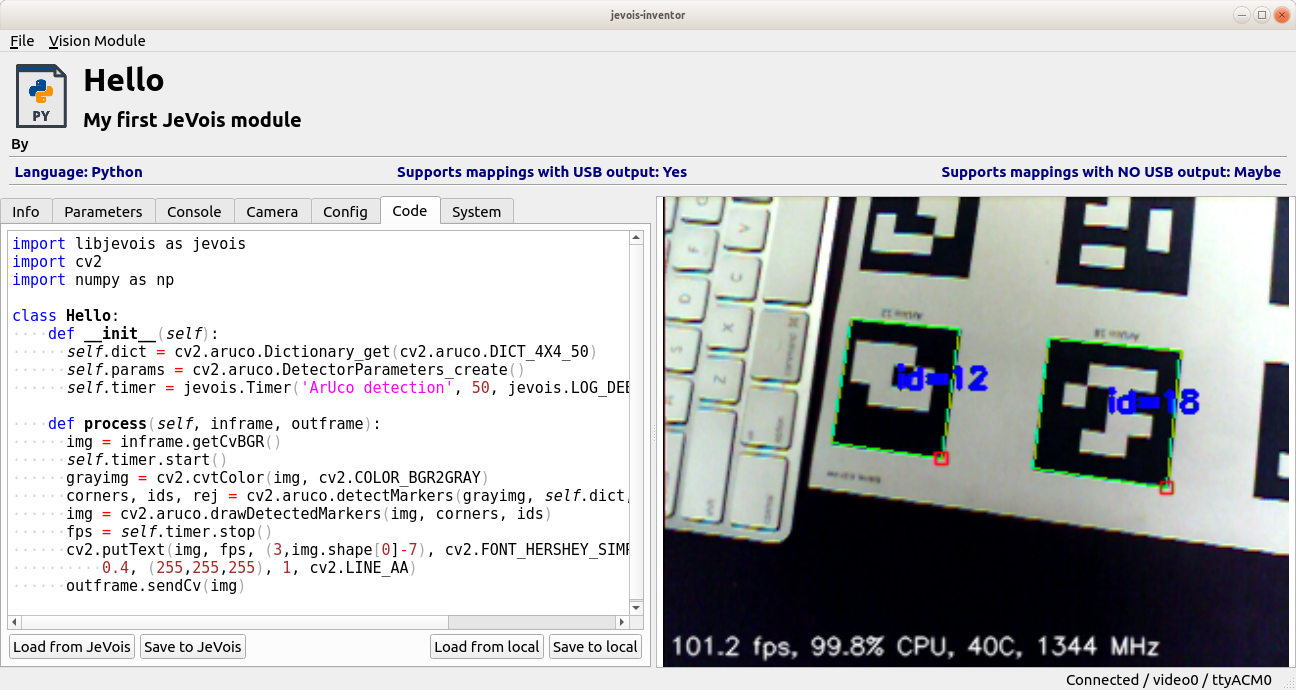

Here, we will start from where we ended in ProgrammerInvHello: a simple ArUco tag detector written in Python + OpenCV using JeVois Inventor.

If you have not yet done so, create a new module called Hello now, as follows:

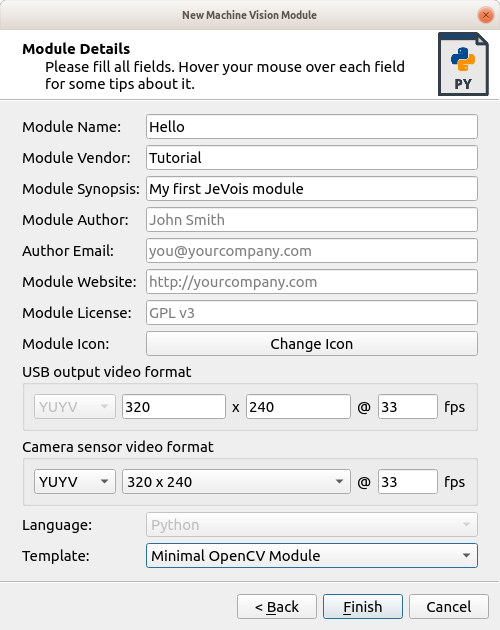

Create a new Python module in JeVois Inventor (keyboard shortcut CTRL-N). Fill in the details as shown below:

See Hello JeVois using JeVois Inventor for more details.

We add a timer to our ArUco detector as follows:

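- In the constructor of the module (the __init__() function), instantiate a Timer.
- Call start() before the processing to be timed and stop() once it is done.
- The stop() function returns a pre-formatted string with frames/s, CPU, etc. info, so we can just write that into our output image using cv2.putText().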
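
The steps above could look roughly like the following sketch. It rests on several assumptions: that the Python binding exposes the timer as `jevois.Timer(name, interval, loglevel)`, that frames are read with `inframe.getCvBGR()` and sent with `outframe.sendCv()`, and that the text position and font settings (chosen here for illustration) suit your image size. The ArUco detection code from the Hello module is elided. The guarded import is only there so that the file can also be loaded on a machine without the JeVois runtime.

```python
try:
    import libjevois as jevois   # provided by the JeVois camera runtime
    import cv2
except ImportError:              # off-camera: lets the file be loaded for reading
    jevois = cv2 = None


class Hello:
    def __init__(self):
        # Assumed signature: report name, reporting interval in frames, log level.
        self.timer = jevois.Timer('Hello', 100, jevois.LOG_DEBUG)

    def process(self, inframe, outframe):
        # Get the next camera frame as an OpenCV BGR image.
        img = inframe.getCvBGR()

        # Start measuring the time spent processing this frame.
        self.timer.start()

        # ... ArUco detection and drawing from the Hello module goes here ...

        # stop() returns a pre-formatted status string; draw it near the
        # bottom-left corner of the output image.
        fps = self.timer.stop()
        cv2.putText(img, fps, (3, img.shape[0] - 7), cv2.FONT_HERSHEY_SIMPLEX,
                    0.4, (255, 255, 255), 1, cv2.LINE_AA)

        # Send the annotated image out over USB.
        outframe.sendCv(img)
```

Creating the timer once in the constructor matters: a timer created inside process() would start from scratch on every frame and never reach its reporting interval.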

A few notes:

- The related Profiler class provides additional checkpoints between start() and stop(). Launch DarknetSingle and enable log outputs (in JeVois Inventor, switch to the Console tab and toggle log messages to USB) to see how it can be used, for example, to measure the time spent computing each layer of a multi-layer neural network. Every few seconds, you will get a report of the time spent computing each layer.
