| By Laurent Itti | itti@usc.edu | http://jevois.org | GPL v3 |
|---|---|---|---|

Video mappings:

- `NONE 0 0 0.0 YUYV 320 240 15.0 JeVois TensorFlowSaliency`
- `YUYV 448 240 30.0 YUYV 320 240 30.0 JeVois TensorFlowSaliency` (recommended network size 128x128)
- `YUYV 512 240 30.0 YUYV 320 240 30.0 JeVois TensorFlowSaliency` (recommended network size 192x192)
- `YUYV 544 240 30.0 YUYV 320 240 30.0 JeVois TensorFlowSaliency` (recommended network size 224x224)


Module Documentation

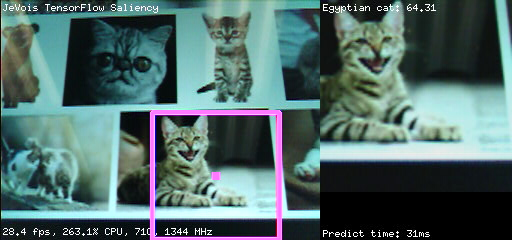

TensorFlow is a popular neural network framework. This module first finds the most conspicuous (salient) object in the scene, then identifies it using a deep neural network, and returns the top-scoring candidates.

See http://ilab.usc.edu/bu/ for more information about saliency detection, and https://www.tensorflow.org for more information about the TensorFlow deep neural network framework.

This module runs a TensorFlow network on an image window around the most salient point and shows the top-scoring results. It alternates from one frame to the next between updating the location of the salient window crop and predicting what is in it. The actual network inference speed (time taken to compute the predictions on one image crop) is shown at the bottom right. See below for how to trade off speed and accuracy.
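The alternation between saliency updates and predictions can be sketched as a simple frame-parity scheduler. This is a minimal illustration, not the module's code: the names `FrameTask`, `taskForFrame` and `predictionsPerSecond` are hypothetical, and which parity handles which job is an assumption. It also makes explicit a consequence of the alternation: assuming each prediction finishes within one frame period, predictions are produced at half the camera frame rate.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical per-frame job selector. Assumption: even frames update the
// salient crop location, odd frames run the network on the latest crop.
enum class FrameTask { UpdateSaliency, RunNetwork };

FrameTask taskForFrame(std::uint64_t frameIndex)
{
  return (frameIndex % 2 == 0) ? FrameTask::UpdateSaliency : FrameTask::RunNetwork;
}

// With strict alternation, only every other frame yields a prediction, so the
// prediction rate is half the camera rate (if inference keeps up with the camera).
double predictionsPerSecond(double cameraFps)
{
  assert(cameraFps >= 0.0);
  return cameraFps / 2.0;
}
```

For example, with the 30 fps camera modes listed above, this scheme would yield at most 15 predictions per second.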

Note that by default this module runs a fast variant of MobileNets trained on the ImageNet dataset. This network can recognize 1000 different kinds of objects (object classes), too many to list here. It is possible to use bigger and more complex networks, but they will likely reduce the frame rate.

For more information about MobileNets, see https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet_v1.md

For more information about the ImageNet dataset used for training, see http://www.image-net.org/challenges/LSVRC/2012/

Sometimes this module will make mistakes! MobileNets are about 40% to 70% correct (top-1 accuracy) on the test set, depending on network size (bigger networks are more accurate but slower).

Neural network size and speed

This module provides a parameter, foa, which determines the size of the region of interest that is cropped around the most salient location. This region is then rescaled, if needed, to the neural network's expected input size. To avoid wasting time on rescaling, it is best to select an foa size equal to the network's input size.

The network's actual input size depends on which network is used; for example, mobilenet_v1_0.25_128_quant expects 128x128 input images, while mobilenet_v1_1.0_224 expects 224x224. Any mismatch between foa and this size is handled by automatically rescaling the cropped window, at some cost in speed.
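The cropping step can be sketched as follows: center an foa-sized window on the salient point, keep it inside the camera frame, and rescale only when foa differs from the network input size. This is a minimal sketch under assumptions: the plain structs `Pt`, `Sz` and `Rect` stand in for OpenCV types such as `cv::Size`, and the functions `foaCrop` and `needsRescale` are hypothetical. Shifting the window at image borders (rather than clipping it) is also an assumption about how border cases are handled.

```cpp
#include <algorithm>
#include <cassert>

// Plain stand-ins for cv::Point / cv::Size / cv::Rect (no OpenCV dependency).
struct Pt { int x, y; };
struct Sz { int w, h; };
struct Rect { int x, y, w, h; };

// Center an foa-sized window on the salient point, then shift it so that it
// lies entirely inside the camera frame (foa must fit within the frame).
Rect foaCrop(Pt salient, Sz foa, Sz frame)
{
  assert(foa.w <= frame.w && foa.h <= frame.h);
  int x = salient.x - foa.w / 2;
  int y = salient.y - foa.h / 2;
  x = std::max(0, std::min(x, frame.w - foa.w));
  y = std::max(0, std::min(y, frame.h - foa.h));
  return Rect{x, y, foa.w, foa.h};
}

// Rescaling is only needed when the crop size differs from the network input size.
bool needsRescale(Sz foa, Sz netInput)
{
  return foa.w != netInput.w || foa.h != netInput.h;
}
```

With a 320x240 camera and foa=128 128, a salient point near the bottom-right corner yields a crop whose top-left corner is shifted to (192, 112), so the crop still fits in the frame; pairing foa=128 128 with a 128x128 network such as mobilenet_v1_0.25_128_quant makes `needsRescale` return false.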

For example:

- mobilenet_v1_0.25_128_quant (network size 128x128), runs at about 8ms/prediction (125 frames/s).

- mobilenet_v1_0.5_128_quant (network size 128x128), runs at about 18ms/prediction (55 frames/s).

- mobilenet_v1_0.25_224_quant (network size 224x224), runs at about 24ms/prediction (41 frames/s).

- mobilenet_v1_1.0_224_quant (network size 224x224), runs at about 139ms/prediction (7 frames/s).
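The frames/s figures in the list above correspond to 1000 divided by the milliseconds per prediction, rounded down. The small helper below (a hypothetical name, `maxPredictionsPerSecond`) reproduces that arithmetic. Note that these rates describe network inference alone; the overall module frame rate also depends on the saliency computation, cropping and display.

```cpp
#include <cassert>

// Convert an inference time per prediction (in milliseconds) into the maximum
// number of predictions per second, rounded down as in the list above.
int maxPredictionsPerSecond(double msPerPrediction)
{
  assert(msPerPrediction > 0.0);
  return static_cast<int>(1000.0 / msPerPrediction);
}
```

For example, 18 ms/prediction gives 1000 / 18 ≈ 55.6, reported as 55 frames/s.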

When using video mappings with USB output, the crop around the most salient image region (whose size is given by foa) is always also rescaled, irrespective of foa, so that when placed to the right of the input image it fills the desired USB output dimensions. For example, if the camera mode is 320x240 and the USB output size is 544x240, then the attended and recognized object is rescaled to 224x224 (since 224 = 544 - 320) for display purposes only. This way, one does not need to change the USB video resolution while experimenting with different values of foa live.
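The display size follows directly from the video mapping: the width left over to the right of the camera image. The sketch below uses a hypothetical helper, `displayPanelSide`; it assumes, as in the 224x224 example, that the displayed crop is square, and the check that it also fits within the output height is our own addition.

```cpp
#include <cassert>

// Side length (pixels) of the square display panel placed to the right of the
// camera image in the USB output: the width left over after the camera image.
// Assumption: the panel is square and must fit within the USB output height.
int displayPanelSide(int usbWidth, int usbHeight, int camWidth)
{
  assert(usbWidth > camWidth);
  int const side = usbWidth - camWidth;
  assert(side <= usbHeight);
  return side;
}
```

Applied to the mappings listed at the top of this page, this gives 128, 192 and 224 for output widths of 448, 512 and 544, matching the recommended network sizes noted next to each mapping.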

Serial messages

On every frame where detection results above thresh were obtained, this module sends a standardized 2D message as specified in Standardized serial messages formatting:

- Serial message type: 2D
- id: top-scoring category name of the recognized object, followed by ':' and the confidence score in percent
- x, y, or vertices: standardized 2D coordinates of the object center or corners
- w, h: standardized object size
- extra: any number of additional category:score pairs that had an above-threshold score, in order of decreasing score, where category is the category name (from namefile) and score is the confidence score from 0.0 to 100.0
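The id and extra fields above can be assembled from raw predictions as sketched below, using the thresh and top parameters from the table further down. This is a hedged illustration rather than the module's implementation: `Prediction`, `catScore` and `buildIdAndExtra` are hypothetical names, printing scores with one decimal place is an assumption, and so is treating "above thresh" as strictly greater than thresh.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <string>
#include <utility>
#include <vector>

// One (category, score) prediction; score is a percentage in [0, 100].
using Prediction = std::pair<std::string, float>;

// Format "category:score". One decimal place is an assumption.
std::string catScore(Prediction const & p)
{
  char buf[32];
  std::snprintf(buf, sizeof(buf), "%.1f", p.second);
  return p.first + ':' + buf;
}

// Keep predictions strictly above thresh, sort by decreasing score, keep at
// most `top`, then split into the best one (id) and the rest (extra, space-separated).
// Returns false when nothing passes the threshold (no message would be sent).
bool buildIdAndExtra(std::vector<Prediction> preds, float thresh, unsigned int top,
                     std::string & id, std::string & extra)
{
  preds.erase(std::remove_if(preds.begin(), preds.end(),
                             [thresh](Prediction const & p) { return p.second <= thresh; }),
              preds.end());
  if (preds.empty() || top == 0) return false;

  std::stable_sort(preds.begin(), preds.end(),
                   [](Prediction const & a, Prediction const & b) { return a.second > b.second; });
  if (preds.size() > top) preds.resize(top);

  id = catScore(preds[0]);
  extra.clear();
  for (std::size_t i = 1; i < preds.size(); ++i)
  {
    if (!extra.empty()) extra += ' ';
    extra += catScore(preds[i]);
  }
  return true;
}
```

With thresh=20 and top=5, predictions {cat: 30, dog: 75.5, car: 10, fox: 50} would produce id `dog:75.5` and extra `fox:50.0 cat:30.0`; car is dropped because it is below threshold.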

See Standardized serial messages formatting for more on standardized serial messages, and Helper functions to convert coordinates from camera resolution to standardized for more info on standardized coordinates.
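As a rough illustration of standardized coordinates, the sketch below converts a box center and size from camera pixels, assuming a centered origin with x spanning -1000 to 1000 and y spanning -750 to 750 for a 4:3 camera image such as the 320x240 modes listed above. Both this convention and the names `StdBox` and `pixelBoxToStd` are assumptions for illustration; the JeVois helper functions referenced above are the authoritative way to do this conversion.

```cpp
#include <cassert>

// Box in standardized coordinates: center (x, y) and size (w, h).
struct StdBox { float x, y, w, h; };

// Hypothetical conversion from camera pixels to standardized coordinates, assuming
// x in [-1000, 1000] and y in [-750, 750] with the origin at the image center (4:3 camera).
StdBox pixelBoxToStd(float cx, float cy, float w, float h, int camw, int camh)
{
  assert(camw > 0 && camh > 0);
  return StdBox{ 2000.0F * cx / camw - 1000.0F,
                 1500.0F * cy / camh - 750.0F,
                 2000.0F * w / camw,
                 1500.0F * h / camh };
}
```

Under these assumptions, a 128x128 foa window centered in a 320x240 frame maps to center (0, 0) and size 800x800.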

Using your own network

For a step-by-step tutorial, see Training custom TensorFlow networks for JeVois.

This module supports RGB or grayscale inputs, in byte or float32 format. You should create and train your network using fast GPUs, and then follow the instructions here to convert your trained network to TFLite format:

https://www.tensorflow.org/lite/

Then create a directory under JEVOIS:/share/tensorflow/ with the name of your network, containing two files: labels.txt with the category labels, and model.tflite with your model converted to TensorFlow Lite (flatbuffer format). Finally, edit JEVOIS:/modules/JeVois/TensorFlowSaliency/params.cfg to select your new network when the module is launched.
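Before pointing netdir at a new directory, it can help to check that both expected files are present and that the labels load. The helpers `loadLabels` and `netDirLooksValid` below are hypothetical, not part of JeVois. They assume one category name per line in labels.txt, in the same order as the network outputs; blank lines are kept so that line positions stay aligned with output indices, and a trailing '\r' (Windows line endings) is stripped.

```cpp
#include <cassert>
#include <fstream>
#include <string>
#include <vector>

// Read labels.txt: one category name per line, in network output order (assumption).
// Blank lines are kept so indices stay aligned; a trailing '\r' is removed.
std::vector<std::string> loadLabels(std::string const & path)
{
  std::vector<std::string> labels;
  std::ifstream f(path);
  if (!f) return labels; // missing file: empty list
  std::string line;
  while (std::getline(f, line))
  {
    if (!line.empty() && line.back() == '\r') line.pop_back();
    labels.push_back(line);
  }
  return labels;
}

// A network directory looks usable if model.tflite can be opened and
// labels.txt yields at least one label.
bool netDirLooksValid(std::string const & dir)
{
  std::ifstream model(dir + "/model.tflite", std::ios::binary);
  return static_cast<bool>(model) && !loadLabels(dir + "/labels.txt").empty();
}
```

For an ImageNet-trained network, one would expect around 1000 labels; a count that differs wildly from the number of network outputs usually signals a mismatched labels.txt.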

| Parameter | Type | Description | Default | Valid Values |
|---|---|---|---|---|
| (TensorFlowSaliency) foa | cv::Size | Width and height (in pixels) of the focus of attention. This is the size of the image crop that is taken around the most salient location in each frame. The foa size must fit within the camera input frame size. To avoid rescaling, it is best to use here the size that the deep network expects as input. | cv::Size(128, 128) | - |
| (TensorFlow) netdir | std::string | Network to load. This should be the name of a directory within JEVOIS:/share/tensorflow/ which should contain two files: model.tflite and labels.txt | mobilenet_v1_224_android_quant_2017_11_08 | - |
| (TensorFlow) dataroot | std::string | Root path for data, config, and weight files. If empty, use the module's path. | JEVOIS_SHARE_PATH /tensorflow | - |
| (TensorFlow) top | unsigned int | Max number of top-scoring predictions that score above thresh to return | 5 | - |
| (TensorFlow) thresh | float | Threshold (in percent confidence) above which predictions will be reported | 20.0F | jevois::Range<float>(0.0F, 100.0F) |
| (TensorFlow) threads | int | Number of parallel computation threads, or 0 for auto | 4 | jevois::Range<int>(0, 1024) |
| (TensorFlow) scorescale | float | Scaling factors applied to recognition scores, useful for InceptionV3 | 1.0F | - |
| (Saliency) cweight | byte | Color channel weight | 255 | - |
| (Saliency) iweight | byte | Intensity channel weight | 255 | - |
| (Saliency) oweight | byte | Orientation channel weight | 255 | - |
| (Saliency) fweight | byte | Flicker channel weight | 255 | - |
| (Saliency) mweight | byte | Motion channel weight | 255 | - |
| (Saliency) centermin | size_t | Lowest (finest) of the 3 center scales | 2 | - |
| (Saliency) deltamin | size_t | Lowest (finest) of the 2 center-surround delta scales | 3 | - |
| (Saliency) smscale | size_t | Scale of the saliency map | 4 | - |
| (Saliency) mthresh | byte | Motion threshold | 0 | - |
| (Saliency) fthresh | byte | Flicker threshold | 0 | - |
| (Saliency) msflick | bool | Use multiscale flicker computation | false | - |

params.cfg file:

    # Config for TensorFlowSaliency. Just uncomment the network you want to use:

    # The default network provided with TensorFlow Lite:
    #netdir=mobilenet_v1_224_android_quant_2017_11_08
    #foa=224 224

    # All mobilenets with different input sizes, compression levels, and quantization. See
    # https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet_v1.md
    # for some info on how to pick one.
    #netdir=mobilenet_v1_0.25_128
    #foa=128 128
    #netdir=mobilenet_v1_0.25_128_quant
    #foa=128 128
    #netdir=mobilenet_v1_0.25_160
    #foa=160 160
    #netdir=mobilenet_v1_0.25_160_quant
    #foa=160 160
    #netdir=mobilenet_v1_0.25_192
    #foa=192 192
    #netdir=mobilenet_v1_0.25_192_quant
    #foa=192 192
    #netdir=mobilenet_v1_0.25_224
    #foa=224 224
    #netdir=mobilenet_v1_0.25_224_quant
    #foa=224 224
    #netdir=mobilenet_v1_0.5_128
    #foa=128 128
    netdir=mobilenet_v1_0.5_128_quant
    foa=128 128
    #netdir=mobilenet_v1_0.5_160
    #foa=160 160
    #netdir=mobilenet_v1_0.5_160_quant
    #foa=160 160
    #netdir=mobilenet_v1_0.5_192
    #foa=192 192
    #netdir=mobilenet_v1_0.5_192_quant
    #foa=192 192
    #netdir=mobilenet_v1_0.5_224
    #foa=224 224
    #netdir=mobilenet_v1_0.5_224_quant
    #foa=224 224
    #netdir=mobilenet_v1_0.75_128
    #foa=128 128
    #netdir=mobilenet_v1_0.75_128_quant
    #foa=128 128
    #netdir=mobilenet_v1_0.75_160
    #foa=160 160
    #netdir=mobilenet_v1_0.75_160_quant
    #foa=160 160
    #netdir=mobilenet_v1_0.75_192
    #foa=192 192
    #netdir=mobilenet_v1_0.75_192_quant
    #foa=192 192
    #netdir=mobilenet_v1_0.75_224
    #foa=224 224
    #netdir=mobilenet_v1_0.75_224_quant
    #foa=224 224
    #netdir=mobilenet_v1_1.0_128
    #foa=128 128
    #netdir=mobilenet_v1_1.0_128_quant
    #foa=128 128
    #netdir=mobilenet_v1_1.0_160
    #foa=160 160
    #netdir=mobilenet_v1_1.0_160_quant
    #foa=160 160
    #netdir=mobilenet_v1_1.0_192
    #foa=192 192
    #netdir=mobilenet_v1_1.0_192_quant
    #foa=192 192
    #netdir=mobilenet_v1_1.0_224
    #foa=224 224
    #netdir=mobilenet_v1_1.0_224_quant
    #foa=224 224

    # Quite slow but accurate, about 4s/prediction, and scores seem out of scale somehow:
    #netdir=inception_v3_slim_2016_android_2017_11_10
    #scorescale=0.07843
    #foa=299 299
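The config above selects a network by leaving exactly one netdir/foa pair uncommented. The sketch below shows one way to read such a file and to check that foa matches the input size encoded in a MobileNet directory name, which avoids the rescaling cost discussed earlier. It is illustrative only: JeVois parses params.cfg itself, and `parseParamsCfg` and `mobilenetInputSize` are hypothetical helpers. Letting later lines override earlier ones, and inferring the input size from the first all-digit token after `mobilenet_v1_`, are our assumptions.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <map>
#include <sstream>
#include <string>

// Read "name=value" lines, ignoring blank lines and lines starting with '#'
// (the commented-out alternatives). In this sketch, later lines override earlier ones.
std::map<std::string, std::string> parseParamsCfg(std::string const & text)
{
  std::map<std::string, std::string> params;
  std::istringstream in(text);
  std::string line;
  while (std::getline(in, line))
  {
    std::size_t const start = line.find_first_not_of(" \t");
    if (start == std::string::npos || line[start] == '#') continue;
    std::size_t const eq = line.find('=', start);
    if (eq == std::string::npos) continue;
    params[line.substr(start, eq - start)] = line.substr(eq + 1);
  }
  return params;
}

// Heuristic: for "mobilenet_v1_..." names, the input size is the first all-digit
// token after the prefix (e.g., 128 in mobilenet_v1_0.5_128_quant). Returns 0 if unknown.
int mobilenetInputSize(std::string const & netdir)
{
  std::string const prefix = "mobilenet_v1_";
  if (netdir.compare(0, prefix.size(), prefix) != 0) return 0;
  std::istringstream in(netdir.substr(prefix.size()));
  std::string tok;
  while (std::getline(in, tok, '_'))
  {
    bool allDigits = !tok.empty();
    for (char c : tok)
      if (!std::isdigit(static_cast<unsigned char>(c))) allDigits = false;
    if (allDigits) return std::stoi(tok);
  }
  return 0;
}
```

For the active lines above, this yields netdir `mobilenet_v1_0.5_128_quant` with an inferred input size of 128, consistent with `foa=128 128`. For non-MobileNet names such as the Inception entry, the heuristic returns 0 and foa has to be checked by hand.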

| Detailed docs: | TensorFlowSaliency |
|---|---|
| Copyright: | Copyright (C) 2018 by Laurent Itti, iLab and the University of Southern California |
| License: | GPL v3 |
| Distribution: | Unrestricted |
| Restrictions: | None |
| Support URL: | http://jevois.org/doc |
| Other URL: | http://iLab.usc.edu |
| Address: | University of Southern California, HNB-07A, 3641 Watt Way, Los Angeles, CA 90089-2520, USA |